Learning to Stylize Novel Views

Hsin-Ping Huang¹, Hung-Yu Tseng¹, Saurabh Saini², Maneesh Singh², Ming-Hsuan Yang¹,³,⁴

¹UC Merced ²Verisk Analytics ³Google Research ⁴Yonsei University

Abstract

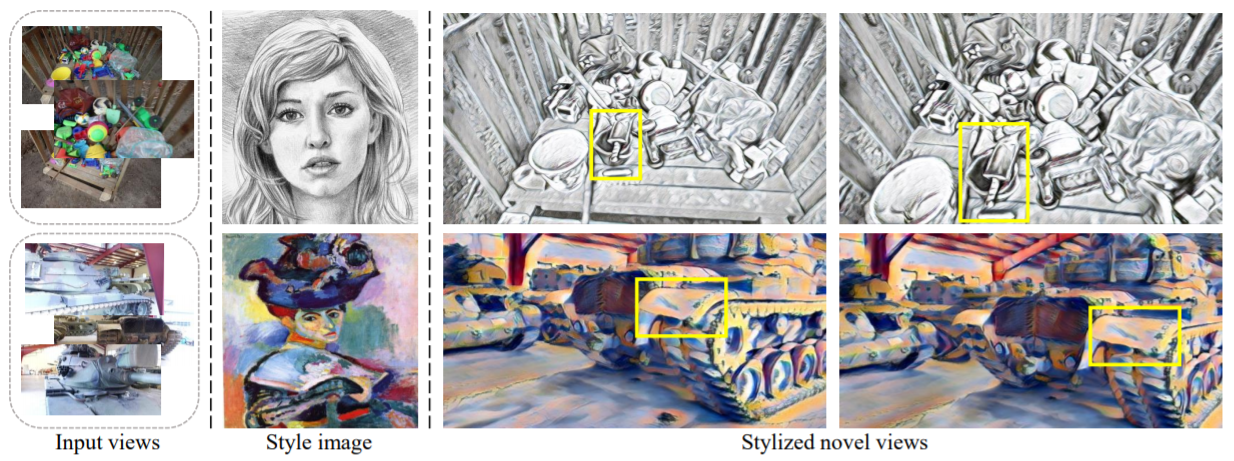

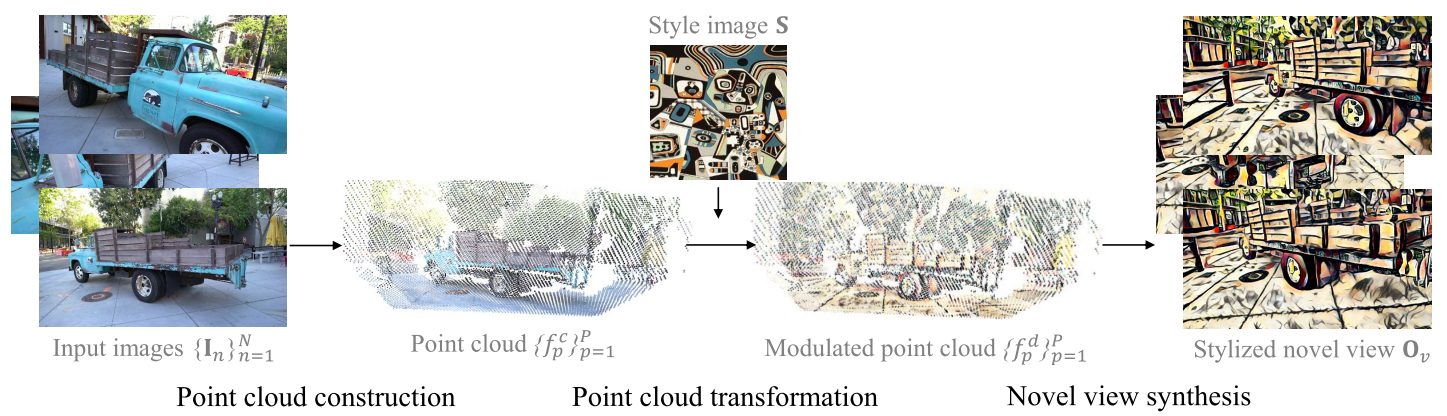

We tackle a 3D scene stylization problem: generating stylized images of a scene from arbitrary novel views, given a set of images of the same scene and a reference image of the desired style as inputs. A direct solution that combines novel view synthesis and stylization approaches leads to results that are blurry or inconsistent across views. We propose a point cloud-based method for consistent 3D scene stylization. First, we construct a point cloud by back-projecting image features into 3D space. Second, we develop point cloud aggregation modules to gather the style information of the 3D scene, and then modulate the features in the point cloud with a linear transformation matrix. Finally, we project the transformed features to 2D space to obtain the novel views. Experimental results on two diverse datasets of real-world scenes validate that our method produces consistent stylized novel views and compares favorably with alternative approaches.

Overview
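The three steps described in the abstract (back-project image features into a point cloud, modulate the point features with one linear transformation matrix, project the result to a 2D view) can be sketched in NumPy as follows. This is a minimal sketch under stated assumptions, not the authors' implementation. It assumes a pinhole camera with known per-pixel depth, intrinsics `K`, and a camera-to-world pose `(R, t)`. It replaces the learned point cloud aggregation modules and the learned transformation with a closed-form whitening-coloring matrix computed from global feature statistics. It renders with a simple one-point-per-pixel z-buffer instead of a learned renderer, and it stops at feature maps, with no decoder to RGB. All function names are hypothetical.

```python
import numpy as np


def backproject(depth, feats, K, R, t):
    """Lift per-pixel features to a world-space point cloud (pinhole model).

    depth: (H, W) z-depth; feats: (H, W, C); K: (3, 3) intrinsics;
    R, t: camera-to-world rotation (3, 3) and translation (3,).
    """
    H, W = depth.shape
    v, u = np.mgrid[0:H, 0:W]                        # pixel rows / columns
    pix = np.stack([u, v, np.ones_like(u)], -1).reshape(-1, 3).astype(float)
    rays = pix @ np.linalg.inv(K).T                  # K^{-1} [u, v, 1]^T per pixel
    cam = rays * depth.reshape(-1, 1)                # camera-space 3D points
    world = cam @ R.T + t                            # camera -> world
    return world, feats.reshape(-1, feats.shape[-1])


def _sqrtm_psd(C, inverse=False, eps=1e-5):
    """(Inverse) square root of a symmetric positive semi-definite matrix."""
    w, V = np.linalg.eigh(C)
    w = np.clip(w, eps, None)                        # guard tiny eigenvalues
    return (V * w ** (-0.5 if inverse else 0.5)) @ V.T


def stylize_points(point_feats, style_feats):
    """Apply ONE linear transformation matrix T to every point feature.

    Assumption: T is the closed-form whitening-coloring matrix
    Cs^{1/2} Cc^{-1/2}, a stand-in for the paper's learned modules.
    """
    mu_c, mu_s = point_feats.mean(0), style_feats.mean(0)
    Cc = np.atleast_2d(np.cov(point_feats, rowvar=False))
    Cs = np.atleast_2d(np.cov(style_feats, rowvar=False))
    T = _sqrtm_psd(Cs) @ _sqrtm_psd(Cc, inverse=True)   # (C, C)
    return (point_feats - mu_c) @ T.T + mu_s


def project(world, feats, K, R, t, H, W):
    """Splat point features into a target view; the nearest point wins."""
    cam = (world - t) @ R                            # world -> camera: R^T (x - t)
    front = cam[:, 2] > 1e-6                         # drop points behind the camera
    cam, f = cam[front], feats[front]
    z = cam[:, 2]
    pix = cam @ K.T
    u = np.round(pix[:, 0] / z).astype(int)
    v = np.round(pix[:, 1] / z).astype(int)
    inside = (u >= 0) & (u < W) & (v >= 0) & (v < H)
    u, v, z, f = u[inside], v[inside], z[inside], f[inside]
    out = np.zeros((H, W, feats.shape[1]))
    zbuf = np.full((H, W), np.inf)
    for i in np.argsort(-z):                         # far to near: nearer overwrites
        out[v[i], u[i]] = f[i]
        zbuf[v[i], u[i]] = z[i]
    return out, zbuf


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    H, W, C = 4, 5, 3
    K = np.array([[2.0, 0.0, W / 2], [0.0, 2.0, H / 2], [0.0, 0.0, 1.0]])
    R, t = np.eye(3), np.zeros(3)
    depth = rng.uniform(1.0, 3.0, (H, W))
    feats = rng.normal(size=(H, W, C))               # hypothetical image features
    style = rng.normal(2.0, 0.5, size=(100, C))      # hypothetical style features
    pts, pf = backproject(depth, feats, K, R, t)
    img, _ = project(pts, stylize_points(pf, style), K, R, t, H, W)
    # Same camera, so every point lands back on its own pixel: prints True.
    print(np.allclose(img.reshape(-1, C), stylize_points(pf, style)))
```

In this sketch, the single matrix `T` is applied to the 3D points before any view is rendered, so every view that sees a given point receives the same stylized feature for it. Stylizing each rendered 2D view independently offers no such guarantee, which matches the motivation for stylizing in 3D stated above.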

Results

Comparison figure panels: Input images; SVS [1] → LST [2]; SVS [1] → TPFR [3]; SVS [1] → Compound [4]; Style image; SVS [1] → FMVST [5]; SVS [1] → MCC [6]; Ours.

BibTeX

@article{huang_2021_3d_scene_stylization,
  title   = {Learning to Stylize Novel Views},
  author  = {Huang, Hsin-Ping and Tseng, Hung-Yu and Saini, Saurabh and Singh, Maneesh and Yang, Ming-Hsuan},
  journal = {arXiv preprint arXiv:2105.13509},
  year    = {2021}
}

References

[1] Gernot Riegler and Vladlen Koltun. Stable View Synthesis. In CVPR, 2021.

[2] Xueting Li, Sifei Liu, Jan Kautz, and Ming-Hsuan Yang. Learning Linear Transformations for Fast Arbitrary Style Transfer. In CVPR, 2019.

[3] Jan Svoboda, Asha Anoosheh, Christian Osendorfer, and Jonathan Masci. Two-Stage Peer-Regularized Feature Recombination for Arbitrary Image Style Transfer. In CVPR, 2020.

[4] Wenjing Wang, Jizheng Xu, Li Zhang, Yue Wang, and Jiaying Liu. Consistent Video Style Transfer via Compound Regularization. In AAAI, 2020.

[5] Wei Gao, Yijun Li, Yihang Yin, and Ming-Hsuan Yang. Fast Video Multi-Style Transfer. In WACV, 2020.

[6] Yingying Deng, Fan Tang, Weiming Dong, Haibin Huang, Chongyang Ma, and Changsheng Xu. Arbitrary Video Style Transfer via Multi-Channel Correlation. In AAAI, 2021.